Refreshing connections

This feature is available starting with DataManagement version 9.2.1.

This feature is designed to assist SDK tools that cache information from a service or database. For example, a database-loading tool may query the target table schema and cache the result to avoid the overhead of querying it repeatedly. However, a stale cache can cause problems when the target table changes. To handle this situation, override the refreshConnection() method of the Tool base class and clear any such cached information.

    @Override
    public void refreshConnection() {
        tableSchema = null;
    }
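A slightly fuller sketch of this pattern shows the cache being repopulated lazily after a refresh. The Tool class below is only a minimal stand-in so that the example compiles on its own; the real SDK base class is not reproduced here. The names TableLoaderTool, loadSchemaFromDatabase(), getTableSchema(), and the column names are hypothetical placeholders, not part of the SDK.

```java
import java.util.Arrays;
import java.util.List;

// Stand-in for the SDK's Tool base class so this sketch compiles on its own.
// Only refreshConnection() comes from the text above; the rest is assumed.
abstract class Tool {
    public void refreshConnection() {
        // Default: nothing cached, nothing to clear.
    }
}

// Hypothetical database-loading tool that caches the target table schema.
class TableLoaderTool extends Tool {
    // Cached schema of the target table; null means "not loaded yet".
    private List<String> tableSchema;

    // Counts how often the (expensive) schema query actually ran.
    int schemaQueries = 0;

    // Hypothetical placeholder for the real schema query.
    private List<String> loadSchemaFromDatabase() {
        schemaQueries++;
        return Arrays.asList("id", "name", "created_at");
    }

    // Returns the cached schema, querying only when the cache is empty.
    List<String> getTableSchema() {
        if (tableSchema == null) {
            tableSchema = loadSchemaFromDatabase();
        }
        return tableSchema;
    }

    @Override
    public void refreshConnection() {
        // Drop the cached schema so the next call re-queries the table.
        tableSchema = null;
    }
}
```

With this shape, repeated calls to getTableSchema() reuse the cached value, and the first call after refreshConnection() queries the table again.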

Writing tools that target Hadoop

The HDFS temp folder

If you want to write temp files into HDFS (or another Hadoop filesystem), use the URL returned by System.getProperty("rpdm.hadoop.tmpdir").
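As a rough illustration, a small helper can turn that property into the location of a specific temp file. The class name HadoopTempPaths and the method tempFileUri() are hypothetical, and the sketch assumes the property holds a URL-style value such as hdfs://host:port/path; it also assumes the property may be unset when the code runs outside RPDM, and fails loudly in that case.

```java
import java.net.URI;

// Hypothetical helper; not part of the SDK.
class HadoopTempPaths {
    // Property name taken from the text above.
    static final String TMPDIR_PROPERTY = "rpdm.hadoop.tmpdir";

    // Builds the URI of a temp file under the Hadoop temp folder.
    // Throws if the property is not set (for example, when run outside RPDM).
    static URI tempFileUri(String fileName) {
        String base = System.getProperty(TMPDIR_PROPERTY);
        if (base == null || base.isEmpty()) {
            throw new IllegalStateException(TMPDIR_PROPERTY + " is not set");
        }
        // Ensure exactly one slash between the base URL and the file name.
        String separator = base.endsWith("/") ? "" : "/";
        return URI.create(base + separator + fileName);
    }
}
```

The resulting URI can then be handed to whatever Hadoop filesystem API the tool already uses.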

Tools that appear in the Parallel Section input and output bars

Deferred

Normally, SDK tools appear in the palette and can be dragged onto the canvas at design time. However, you can create a special type of SDK tool that appears in the Parallel Section input or output bars instead. This requires careful design to make everything work correctly, and is not a simple endeavor.

Input tool

To make an SDK tool that will appear in the Parallel Section’s input bar and menu, your tool class must implement IsParallelSectionInput and its methods.

    class MyInputTool extends GeneralTool implements IsParallelSectionInput {
    }

Integrating with RPDM’s Hadoop security

If you are writing a tool that interfaces with Hadoop, you might want to take advantage of the fact that RPDM has already dealt with the security issues of authenticating with Hadoop, renewing tokens, delegating tokens to YARN tasks, and handling the differences between Hadoop vendors and versions. This integration doesn’t happen automatically, however, because by default every SDK tool jar exists in its own classloader space, meaning that it cannot share the UserGroupInformation singleton managed by RPDM.

Specify the Hadoop “base module”

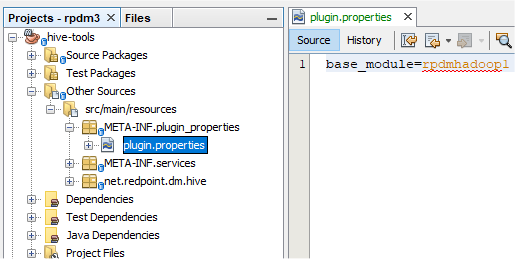

To integrate with RPDM’s Hadoop security, you should first create a resource file in your project named /META-INF/plugin_properties/plugin.properties. In this file, add a single line containing base_module=rpdmhadoop1.

In the Netbeans IDE this looks like the following image.

Doing so makes your SDK tool’s ClassLoader “inherit” from the ClassLoader used by RPDM’s Hadoop module, making things like the Hadoop Configuration and FileSystem available.
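Because a misplaced or misspelled resource file fails silently, it can help to check at build or test time that the file is actually packaged. The following is only a sketch of such a check: the class PluginPropertiesCheck and its methods are hypothetical, and nothing here reflects how RPDM itself reads the file. It uses only the standard java.util.Properties loader.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical sanity check; not part of the SDK.
class PluginPropertiesCheck {
    // Resource path taken from the text above.
    static final String RESOURCE = "/META-INF/plugin_properties/plugin.properties";

    // Parses a properties stream and returns the base_module value,
    // or null if the key is absent.
    static String parse(InputStream in) {
        try {
            Properties props = new Properties();
            props.load(in);
            return props.getProperty("base_module");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Looks the resource up on the classpath of the given class.
    // Returns null if the resource is not packaged at all.
    static String readBaseModule(Class<?> anchor) {
        try (InputStream in = anchor.getResourceAsStream(RESOURCE)) {
            return in == null ? null : parse(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

A unit test in the tool's project could then assert that readBaseModule(MyTool.class) returns "rpdmhadoop1", where MyTool stands for any class in the tool jar.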

Make all Hadoop dependencies “provided” scope in pom.xml

If your SDK jar is built using Maven shading, marking the Hadoop dependencies as “provided” keeps them out of the shaded jar, so your tool picks up the Hadoop jars of the defined cluster rather than whatever jars are referenced as dependencies.

Example:

    <dependency>
        <groupId>org.apache.hive</groupId>
        <artifactId>hive-jdbc</artifactId>
        <version>${hive-jdbc-version}</version>
        <scope>provided</scope>
    </dependency>