This page provides a list of Redpoint Data Management known issues and available workaround solutions.

| ID | Issue | Workaround |
|---|---|---|
| DM-17008 | Data Management’s Snowflake Bulk data provider interprets the literal string “NULL” or “null” as a logical NULL value. | Customers whose business logic depends on inserting a literal “NULL” or “null” are advised to use the non-bulk Snowflake data provider and set Insert type to Single record or Block. |
| DM-14623 | Projects created in earlier Data Management versions store Azure Storage Queue secrets in plain text. Prior to Data Management 9.4.8, the Connection string and SAS token properties for Azure Storage Queue tools were presented in the Properties panel as Text fields, so their values were stored as plain text when the tool configuration was persisted. Beginning in Data Management 9.4.8, the Connection string and SAS token properties are treated as secrets and presented in the Properties panel as Password fields, which ensures they are encrypted when persisted in the tool configuration. However, Data Management cannot programmatically migrate plain-text properties to encrypted secrets in existing projects or shared settings. | Manually reconfigure the Connection string and SAS token properties in projects that contain Azure Storage Queue tools, and in shared settings under Repository > Settings > Tools > Azure Storage Queue. |
| DM-12522 | Retype tool configuration does not convert new fields. Property auto-commit might disable the Convert new fields option when the Change Field Types tool is selected on the canvas. If new fields are detected in the upstream record during a subsequent project execution, the tool might not apply the specified Data type to them. | Each time you select the Change Field Types tool, commit its properties using the Commit button. |
| DM-14135 | SQL Server Bulk Copy insert type fails when a table contains NVARCHAR(MAX) columns. Due to a limitation in JDBC, the SQL Server Bulk Copy insert type does not support NVARCHAR columns whose lengths exceed 4000 characters. | Modify your table’s DDL. You can:<br><br>If you cannot modify your table’s DDL, use the RDBMS Output tool’s Block insert type. |
| DM-13908 | SQL Server Bulk Copy insert type cannot be used when the table includes a timestamp column. The SQL Server Bulk Copy insert type cannot be used when the table includes a column whose data type is timestamp or rowversion. This type is generally used to version-stamp table rows with an incrementing number that does not represent a date or time. | Use the Block insert type and omit timestamp and rowversion columns from the schema mapping. |
| DM-953 | Using built-in SQL functions with an RDBMS tool configured for Oracle JDBC causes an error. Due to an issue with Oracle’s JDBC driver, SQL statements that use Prepared Statement parameter replacement may fail, because the driver cannot replace parameters used as arguments to a SQL function in a Prepared Statement. For example, this statement:<br><br>will get the following error: | Do not use Prepared Statement parameter replacement with the Oracle JDBC driver. |
| DM-4850 | Mismatch between Site Server and Execution Server security settings causes failures. It is possible to create a multi-host Data Management site with incompatible security settings. An Execution Server configured with different core security settings than its Site Server will not run correctly. | On the Execution Server, check that the Data Management configuration file CoreCfg.properties (located in the Data Management installation folder) has the same core security settings as the Site Server for the following properties:<br><br>If necessary, stop the Execution service, edit the |
| DM-4939 | Errors in tools embedded within macros are not shown when “Show errors for selected tool only” is enabled. The Show errors for selected tool only filter option on the Message Viewer does not display errors in tools that are contained within a selected macro. | None. |
| DM-5025 | Cannot change repository view to a specific time. Setting the Site Server’s Repository View property to a defined Label or Specific time has no effect. | Open a specific version from the Version tab on the repository object’s Properties pane. |
| DM-5160 | When Advanced Security is enabled, logons with no domain get an error. On Windows, if advanced security mode is enabled, users with logons that do not include a domain (for example, username instead of domain\username) may be unable to open or create projects and automations, and may see an error message like errno6. | Either specify a Default domain in Site Settings, or specify a fully qualified OS User for each Data Management user. |
| DM-5456, DM-5491 | Oracle identifiers are case-sensitive, which can cause problems when there’s a case mismatch. Oracle treats quoted identifiers as case-sensitive and stores unquoted identifiers in uppercase, so a case mismatch can cause problems when using RDBMS Output tools configured to use the Oracle JDBC driver. | RDBMS Output tools writing to Oracle should enclose identifiers in double quotes. |
| DM-5873 | Repository archive created with Version 9.0.2 client and Version 9.0.1 server cannot be loaded. Repository archives created using a Version 9.0.2 Data Management client and a Version 9.0.1 Site Server are empty and cannot be used with the Load Archive command. | Upgrade the Data Management Site Server to Version 9.0.2 or later. |
| DM-7083 | In SQL Server, conversion of a datetime2 data type to a datetime data type results in an out-of-range value. In SQL Server, updating tables that contain datetime2 fields may improperly truncate data when the Use temp table option for updates is set to Always or Batch Only. Data Management supports Insert and Update into existing tables with datetime2 fields but does not support creating tables with datetime2 fields. | Pre-create the temp table with the desired datetime2 field instead of allowing Data Management to create it for you. |
| DM-7508 | JDBC error reading from table with special characters in field name. An RDBMS Input tool configured to perform a direct table read using a SQL Server provider may return an Invalid column schema error when one of the target fields contains special characters. | Instead of using a direct table read, use a query such as Select * or Select [field_name]. |
|

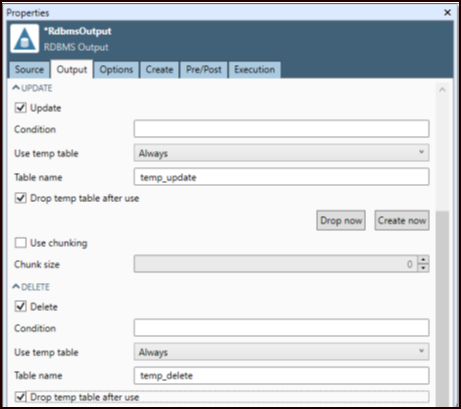

DM-7992 |

Google BigQuery is slow when temporary tables aren't used for update and delete. An RDBMS Output tool performing update or delete operations on a Google BigQuery target will run very slowly unless it is configured to use temp tables. |

Configure the RDBMS Output tool to use temp tables on output, as shown below:

|

| DM-8530 | RDBMS Output tool does not consider character encoding when specifying column lengths for automatically created tables. VARCHAR columns will accept multi-byte UTF-8 characters. However, in many RDBMSs, VARCHAR column lengths are measured in bytes rather than characters. If you’re storing 10 single-byte characters in a VARCHAR column, its length need only be 10 bytes; if you’re storing 10 double-byte characters, you’ll need 20 bytes. The Automatically create missing tables option in the RDBMS Output tool determines the table schema from the input record, but does not account for the storage requirements of multi-byte characters when calculating the length of VARCHAR columns. The resulting DDL may create a table whose VARCHAR column lengths are insufficient for the record data. | Avoid using the Automatically create missing tables option when working with records that may contain multi-byte characters. Instead, select the Script table creation for missing tables option and edit the resulting DDL to ensure the column lengths are adequate for storing the field values. |
| DM-8533 | RDBMS Input tool missing input node. In Version 9.1.1 and later, the Input connector that provides input to dynamic queries for the RDBMS Input tool is no longer displayed by default. | To display the dynamic query input connector, insert a row in the Dynamic Query data grid, specify the SQL field, and click Commit. |
| DM-8778 | RDBMS Output tool cannot display empty schemas. Schemas that don't contain any tables are not included in the RDBMS Output tool’s list of available Schemas. This can cause problems when the RDBMS Output tool is configured to Automatically create missing tables and no tables exist in the destination schemas. | Manually enter the empty schema’s name in the Schema property, and the new table name in the Table property. Once the project is run and the new table created, select Refresh tables to display both the schema and the new table. |
| DM-8784 | Long-running projects authenticating to Azure services via OAuth2 may be impacted by default token expiry. By default, OAuth2 tokens in Azure expire after an hour. This can create problems for long-running Data Management projects authenticating to Azure services via OAuth2. | Use a different authorization method (ADL2 access key or ABS connection string), increase the token lifetime in the Azure portal, or edit the project to write smaller files. |
| DM-8792 | Abandoned multipart uploads to cloud storage can leave behind blob parts or temp files. If you cancel a write operation to cloud storage, the "parts" of the incomplete multipart upload are not deleted immediately. | Amazon and Azure have garbage collection facilities that remove these parts after a period of time, but you may need to configure your storage provider to enable this. Google Cloud Storage does not appear to have a garbage collection facility, so you will need to use the storage manager web page to periodically check for incomplete uploads and remove them. |
| DM-8845 | Configuration locator may not display tool/macro names for older projects. If you attempt to reconfigure a project or macro created before Version 9.4.1 from an automation, the tool icons are shown but not the tool names, making it difficult to distinguish multiple instances of the same tool type. | In the current Data Management version, open the old project or macro and then save it. |
|

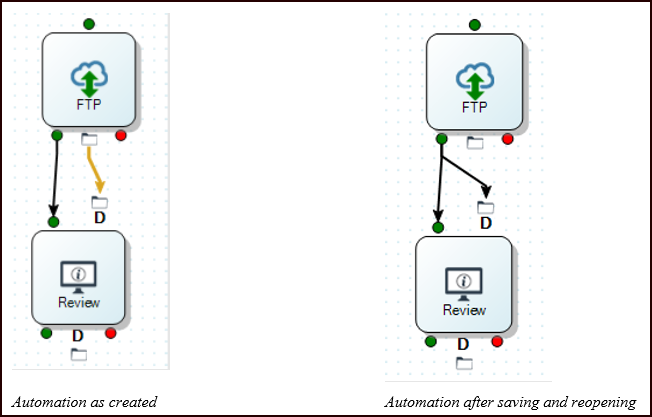

DM-8934 |

Automation connector displays incorrectly. Certain automation connections may display connectors incorrectly when the project is saved and reopened.

|

The automation will execute correctly. No workaround is necessary. |

| DM-10508 | RDBMS Output tool schema mismatch when inserting very large Unicode or TextVar fields. An RDBMS Output tool configured with any JDBC provider will fail with a schema mismatch error when an upstream Unicode or TextVar field sized ~10MB (≥10388608 bytes) is mapped to a database column. | Reduce the configured size of the upstream TextVar field to less than 10MB (10388608 bytes). |
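
For DM-17008, it may help to confirm whether a data set actually contains the affected values before switching to the non-bulk Snowflake provider. The following is a minimal Python sketch, assuming records are available as dictionaries of field name to value. `rows_with_null_literals` is a hypothetical helper, not part of Data Management, and it matches only the two spellings named in the issue.

```python
from typing import Any, Iterable, Iterator, Mapping

# The two spellings DM-17008 says the Snowflake Bulk provider reads as a logical NULL
NULL_LITERALS = {"NULL", "null"}


def rows_with_null_literals(rows: Iterable[Mapping[str, Any]]) -> Iterator[tuple[int, str]]:
    """Yield (row index, field name) for each string value equal to "NULL" or "null"."""
    for index, row in enumerate(rows):
        for field, value in row.items():
            if isinstance(value, str) and value in NULL_LITERALS:
                yield index, field


records = [{"id": "1", "note": "NULL"}, {"id": "2", "note": "nullable"}]
print(list(rows_with_null_literals(records)))  # [(0, 'note')]
```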
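
For DM-14135, it may be worth checking whether existing values actually exceed the 4000-character limit before modifying the DDL. SQL Server sizes NVARCHAR in byte-pairs (UTF-16 code units), so a character outside the Basic Multilingual Plane, such as most emoji, counts as two. This is a minimal Python sketch, assuming the column values have been exported as Python strings. The helper names are hypothetical.

```python
from typing import Iterable

NVARCHAR_LIMIT = 4000  # Bulk Copy limit stated in DM-14135


def utf16_code_units(value: str) -> int:
    """Length as SQL Server counts it for NVARCHAR: UTF-16 byte-pairs."""
    return len(value.encode("utf-16-le")) // 2


def values_over_limit(values: Iterable[str], limit: int = NVARCHAR_LIMIT) -> list[str]:
    """Return the values that would not fit in an NVARCHAR(limit) column."""
    return [v for v in values if utf16_code_units(v) > limit]


print(utf16_code_units("abc"), utf16_code_units("\U0001F600"))  # 3 2
```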
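
For DM-953, avoiding parameter replacement means writing values into the SQL text as literals. This is a minimal Python sketch of Oracle string-literal escaping, where an embedded single quote is written as two single quotes. It assumes the values are trusted: inlining untrusted input this way risks SQL injection. `oracle_string_literal` and the `customers`/`last_name` names are hypothetical.

```python
def oracle_string_literal(value: str) -> str:
    """Render a Python string as an Oracle character literal."""
    # Inside single-quoted literals, Oracle represents a single quote as ''
    return "'" + value.replace("'", "''") + "'"


# Instead of a parameterized form such as: ... WHERE last_name = UPPER(?)
name = "O'Brien"
sql = f"SELECT * FROM customers WHERE last_name = UPPER({oracle_string_literal(name)})"
print(sql)  # SELECT * FROM customers WHERE last_name = UPPER('O''Brien')
```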
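
For DM-4850, comparing the two CoreCfg.properties files can be scripted once you know which properties to check. This is a minimal Python sketch that assumes a simplified .properties syntax: `#`/`!` comments and `=`/`:` separators, with no whitespace-only separators, line continuations, or escape sequences. The file paths and key name in the usage comment are placeholders. Substitute the core security properties the workaround refers to.

```python
def parse_properties(text: str) -> dict[str, str]:
    """Parse simple 'key=value' or 'key: value' lines; '#' and '!' start comments."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        # Split on whichever separator ('=' or ':') appears first in the line
        cuts = [i for i in (line.find("="), line.find(":")) if i != -1]
        if not cuts:
            props[line] = ""  # a bare key with no value
            continue
        cut = min(cuts)
        props[line[:cut].strip()] = line[cut + 1:].strip()
    return props


def mismatched_settings(site: dict[str, str], execution: dict[str, str],
                        keys: list[str]) -> dict[str, tuple]:
    """Return {key: (site value, execution value)} for each key whose values differ.
    A key missing from a file shows up as None."""
    return {k: (site.get(k), execution.get(k))
            for k in keys if site.get(k) != execution.get(k)}


# Usage (file paths and key names are placeholders):
# with open("site/CoreCfg.properties", encoding="utf-8") as f:
#     site = parse_properties(f.read())
# with open("exec/CoreCfg.properties", encoding="utf-8") as f:
#     execution = parse_properties(f.read())
# print(mismatched_settings(site, execution, ["example.security.property"]))
```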
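
For DM-5160, a quick pre-check can list which logons would be affected before Advanced Security is enabled. This is a minimal Python sketch, assuming logons are plain strings in the `domain\username` form described in the issue. The helper name and sample logons are hypothetical, and other formats such as `user@domain` are not handled.

```python
def is_domain_qualified(logon: str) -> bool:
    """True for logons of the form domain\\username with both parts non-empty."""
    domain, sep, user = logon.partition("\\")
    return bool(sep) and bool(domain) and bool(user)


logons = ["CORP\\jsmith", "adoe"]
print([name for name in logons if not is_domain_qualified(name)])  # ['adoe']
```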
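
For DM-5456 and DM-5491, the case mismatch comes from how Oracle resolves names. An unquoted identifier is stored in uppercase, while a double-quoted identifier keeps its exact case. This is a minimal Python sketch of both rules. The helper names are hypothetical, and the rejection of double quotation marks and the null character reflects Oracle's rule that no identifier may contain them.

```python
def oracle_stored_name(identifier: str) -> str:
    """Return the name Oracle resolves: quoted keeps its case, unquoted is uppercased."""
    if len(identifier) >= 2 and identifier[0] == identifier[-1] == '"':
        return identifier[1:-1]
    return identifier.upper()


def quote_oracle_identifier(name: str) -> str:
    """Wrap a name in double quotes so Oracle preserves its case."""
    # Oracle identifiers may not contain double quotation marks or the null character
    if '"' in name or "\0" in name:
        raise ValueError(f"not a valid Oracle identifier: {name!r}")
    return f'"{name}"'


print(oracle_stored_name("CustomerId"))                           # CUSTOMERID
print(oracle_stored_name(quote_oracle_identifier("CustomerId")))  # CustomerId
```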
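
For DM-7083, the out-of-range error occurs because SQL Server's datetime type covers only January 1, 1753, through December 31, 9999 23:59:59.997, while datetime2 starts at January 1, 0001. This is a minimal Python sketch for finding values that cannot be converted. It assumes naive datetimes and ignores datetime's rounding to .000, .003, or .007 seconds. `fits_sql_server_datetime` is a hypothetical helper.

```python
from datetime import datetime

# SQL Server datetime range; datetime2 values before 1753 fall outside it
DATETIME_MIN = datetime(1753, 1, 1)
DATETIME_MAX = datetime(9999, 12, 31, 23, 59, 59, 997000)


def fits_sql_server_datetime(value: datetime) -> bool:
    """True if a naive datetime lies within SQL Server's datetime range."""
    return DATETIME_MIN <= value <= DATETIME_MAX


print(fits_sql_server_datetime(datetime(1700, 1, 1)))  # False
print(fits_sql_server_datetime(datetime(2024, 5, 1)))  # True
```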
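
For DM-7508, when a table has many fields with special characters, an explicit Select list can be generated rather than typed by hand. This is a minimal Python sketch that assumes SQL Server bracket quoting, applying the same rule as `QUOTENAME`, where a closing bracket inside the name is doubled. The helper names, the table and column names, and the `dbo` default schema are hypothetical.

```python
def bracket_quote(name: str) -> str:
    """Quote a SQL Server identifier with brackets, doubling any ']' inside it."""
    return "[" + name.replace("]", "]]") + "]"


def select_statement(table: str, columns: list[str], schema: str = "dbo") -> str:
    """Build SELECT [col1], [col2] FROM [schema].[table]."""
    column_list = ", ".join(bracket_quote(c) for c in columns)
    return f"SELECT {column_list} FROM {bracket_quote(schema)}.{bracket_quote(table)}"


print(select_statement("Orders", ["Order #", "Unit Price ($)"]))
# SELECT [Order #], [Unit Price ($)] FROM [dbo].[Orders]
```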
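
For DM-8530, the gap between character count and byte length is straightforward to measure when editing scripted DDL. UTF-8 uses one to four bytes per character, so many CJK characters take three bytes rather than two. This is a minimal Python sketch, assuming the target RDBMS measures VARCHAR length in UTF-8 bytes. The helper names are hypothetical.

```python
from typing import Iterable


def utf8_byte_length(value: str) -> int:
    """Bytes needed to store the string as UTF-8."""
    return len(value.encode("utf-8"))


def required_varchar_bytes(values: Iterable[str]) -> int:
    """Smallest byte-measured VARCHAR length that holds every value (0 if none)."""
    return max((utf8_byte_length(v) for v in values), default=0)


sample = ["abcdefghij", "café", "日本語"]
for s in sample:
    print(s, len(s), utf8_byte_length(s))
# abcdefghij 10 10
# café 4 5
# 日本語 3 9
print(required_varchar_bytes(sample))  # 10
```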
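
For DM-8784, reading a token's actual expiry can help you decide between a longer token lifetime and writing smaller files. This is a minimal Python sketch, assuming the access token is a JWT with a standard `exp` claim (seconds since the Unix epoch). That format is typical for Azure-issued OAuth2 access tokens but is not guaranteed. The signature is not verified, so use this only for diagnostics. The helper names are hypothetical.

```python
import base64
import json
from datetime import datetime, timedelta, timezone


def jwt_expiry(token: str) -> datetime:
    """Return the UTC time in a JWT's 'exp' claim. The signature is NOT verified."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # base64url segments omit '=' padding
    claims = json.loads(base64.urlsafe_b64decode(payload))
    return datetime.fromtimestamp(claims["exp"], tz=timezone.utc)


def expires_during_run(token: str, start: datetime, expected_runtime: timedelta) -> bool:
    """True if the token expires before a run starting at `start` is expected to finish."""
    return jwt_expiry(token) < start + expected_runtime
```

For a token that expires one hour from now, `expires_during_run(token, datetime.now(timezone.utc), timedelta(hours=3))` returns True, which indicates that a three-hour project would outlive the token.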
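
For DM-8792, Amazon S3 cleans up abandoned parts through a bucket lifecycle rule with an `AbortIncompleteMultipartUpload` action. This is a minimal Python sketch that builds that configuration as a dictionary in the shape accepted by boto3's `put_bucket_lifecycle_configuration`. The rule ID, the seven-day default, and the empty prefix (whole bucket) are illustrative choices, not values taken from Data Management.

```python
def abort_incomplete_uploads_config(days: int = 7, prefix: str = "") -> dict:
    """S3 lifecycle configuration that aborts multipart uploads still incomplete after `days` days."""
    return {
        "Rules": [
            {
                "ID": "abort-incomplete-multipart-uploads",  # illustrative rule name
                "Filter": {"Prefix": prefix},  # empty prefix applies the rule to the whole bucket
                "Status": "Enabled",
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
            }
        ]
    }
```

With boto3 installed and credentials configured, `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="your-bucket", LifecycleConfiguration=abort_incomplete_uploads_config())` applies the rule. That call replaces any existing lifecycle configuration on the bucket, so merge in rules you already have first.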