In a few specific cases, you can increase throughput by splitting the record stream and processing the split streams separately. Parallel processing only helps if all three of the following conditions are true:

- There is a single slow tool or macro that is bottlenecking the process.
- There is no interaction between the records processed in the slow step (that is, no matching).
- Data Management is using less than 50% of total CPU during this slow step.

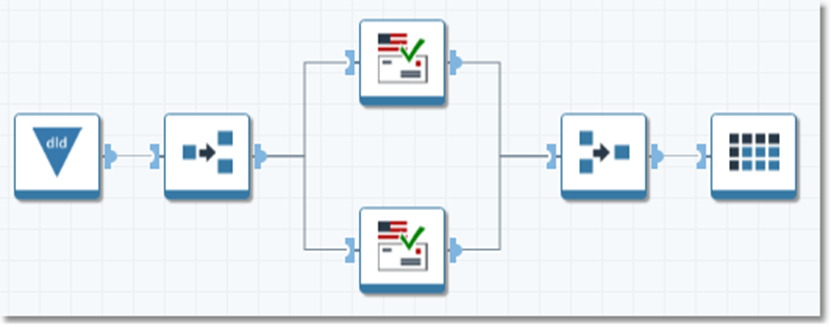

The most likely candidates for this treatment are the Standardize Address tool, the Geocoder tool, and the Window Compare tool. To leverage more CPUs, do the following:

- Add a Splitter tool configured for a Round-robin type split.
- Replicate the slow tool or macro by copy/paste.
- Connect the tools together as shown in the figure, with a Merge tool configured for a Round-robin type merge.
- If a Standardize Address or Geocoder tool is being replicated, clear the Share library option on the Options tab of both tools. If you leave the libraries shared (the default setting), Data Management will serialize access to the libraries.
- Finally, measure the results in a controlled test. The results may be surprising. Because parallel throughput depends on so many factors of computer architecture, cache, and memory, you may find that this strategy doesn't always help. Typically, you should expect to see a 50% to 75% increase in throughput by doubling the processing. We normally find that tripling the processing does not have a significant advantage over doubling.
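
The record flow described in the steps above can be illustrated outside the product with a minimal Python sketch. This is not Data Management code or its API: the function names (`round_robin_split`, `round_robin_merge`, `slow_step`, `run_parallel`, `throughput_increase`) are hypothetical, and `slow_step` is only a stand-in for a slow tool that treats each record independently. The sketch shows why a round-robin merge restores the original record order after a round-robin split, and how a throughput increase might be computed from two timed runs. It illustrates the flow only, and says nothing about the speedup you would see in a real project.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import zip_longest

_MISSING = object()  # sentinel for streams that run out of records early


def round_robin_split(records, n):
    """Deal records into n streams: record i goes to stream i % n."""
    return [records[i::n] for i in range(n)]


def round_robin_merge(streams):
    """Take one record from each stream in turn, restoring the input order."""
    merged = []
    for group in zip_longest(*streams, fillvalue=_MISSING):
        merged.extend(r for r in group if r is not _MISSING)
    return merged


def slow_step(record):
    """Hypothetical stand-in for the slow tool; uses no other record."""
    return record.strip().upper()


def process_stream(stream):
    """One replica of the slow step, applied to a single split stream."""
    return [slow_step(r) for r in stream]


def run_parallel(records, n=2):
    """Split, run n replicas of the slow step concurrently, then merge."""
    streams = round_robin_split(records, n)
    with ThreadPoolExecutor(max_workers=n) as pool:
        results = list(pool.map(process_stream, streams))
    return round_robin_merge(results)


def throughput_increase(baseline_seconds, parallel_seconds):
    """Percent throughput gain when the same records finish in less time."""
    return (baseline_seconds / parallel_seconds - 1.0) * 100.0
```

For example, if a controlled test over the same input takes 100 seconds with one copy of the slow tool and 60 seconds with two, `throughput_increase(100.0, 60.0)` gives roughly 67%, which falls inside the 50% to 75% range quoted above.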