Overview

The JSON Input tool reads JSON-formatted records and transforms nested data into a relational data model. The tool produces multiple outputs. The number of outputs and their kinds (labels) is determined by the tool's configuration.

To automate output definition, you can import a predefined schema specifying field names, field descriptions, and (optionally) field sizes.

Linkage fields (ID fields that associate parent and child records) must be of type Integer (4).

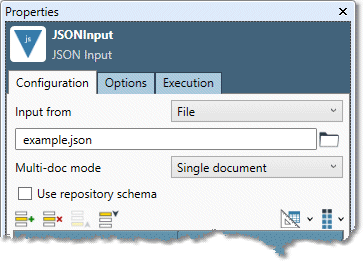

JSON Input tool configuration parameters

The JSON Input tool has two sets of configuration parameters in addition to the standard execution options.

Configuration

|

Parameter |

Description |

|---|---|

|

Input from |

The source of the data:

|

|

Input file |

If Input from is File, the file containing the records, or a wildcard pattern matching multiple files. |

|

Input field |

If Input from is Field, the field from which the data will be read. If you are connecting to an upstream Web Service Input tool, this is |

|

Include input fields |

If Input from is Field, optionally passes through the "extra" input fields to the "root" record. This can be useful in certain kinds of processing where it is desirable to carry through identifying information that is not represented in the JSON documents. |

|

Multi-doc mode |

How to process input files or fields that contain multiple JSON documents:

|

|

Use repository schema |

If selected, configure the field layout using the specified Schema instead of configuring fields directly. |

|

Schema |

If Use repository schema is selected, a schema must be specified. |

|

Record |

Record specification defining an output of the tool. This is difficult to configure manually. See Configuring the JSON Input tool. |

|

Path |

Sequence of identifiers separated by |

Options

|

Parameter |

Description |

|---|---|

|

Limit records |

If selected, limits the number of records read. |

|

Read only the first |

If Limit records is selected, specifies the number of records to be read. |

|

Produce file name field |

If selected, the file name will be output as a record field. |

|

Output full path |

If Produce file name field is selected, optionally outputs the entire path to the record file name field. |

|

Output URI path |

If Output full path is selected, express path as a Uniform Resource Identifier (URI). |

|

Field name |

If Produce file name field is selected, name of the column to be used for the file name. This is optional and defaults to |

|

Field size |

If Produce file name field is selected, size of the field to be used for the file name. This is optional and defaults to |

|

Flatten value arrays |

If selected before analyzing the data, changes the interpretation of simple arrays of values to produce a single output in which array values are combined into a single field and separated by commas, rather than sent to a separate output as a child relation of the root document. |

|

Error handling |

Configures how errors in the input data will be handled:

|

|

Parsing errors |

Specify how to handle input containing invalid JSON that cannot be processed. The Issue warning option is not available for this type of error. |

|

Conversion errors |

Specify how to handle input data that cannot be correctly converted to its specified data type (such as converting "Jane Smith" to a Date). |

|

Truncation errors |

Specify how to handle input with truncated field values (such as storing "John Smithson" in a Unicode(1) field). |

|

Missing fields |

Specify how to handle input with fields that are missing or contain empty values. |

|

Extra fields |

Specify how to handle input with extra elements that are not defined in the tool's configuration. |

|

Send errors to Message Viewer |

If selected, all error types configured to either Issue warning or Reject record will send warnings to the Message Viewer. The Report warnings option must be selected on the Execution tab. |

|

Send errors to Error Report connector |

If selected, creates an E "Error Report" output connector. All error types configured to either Issue warning or Reject record will send detailed error messages to that connector. Attach a downstream Data Viewer or other output tool to review these messages. |

Multi-doc mode

Data Management's JSON Input tool supports the processing of files and fields containing multiple JSON documents with Multi-doc mode.

Multi-doc mode has four options:

-

Single document

-

Document per line

-

Multi-line documents

-

Map-enclosed array

-

Multi-document mashup

Single document

Each file or field contains a single JSON document. (This is the default option.) For example, both of the following are valid Single document inputs:

A single map:

{

"first": "joe",

"last": "smith"

}

An array of maps:

[

{

"first": "joe",

"last": "smith"

},

{

"first": "mary",

"last": "lamb"

},

{

"first": "george",

"last": "washington"

}

]

Document per line

-

Each file or field contains multiple JSON documents, with one document per line of text.

-

These documents may have trailing commas, and may be enclosed by surrounding array brackets

[]on separate lines. -

This is commonly seen in Twitter feed archives.

For example, all of the following are valid Document per line inputs.

A list of maps:

{ "first" : "joe", "last" : "smith" }

{ "first" : "mary", "last" : "lamb" }

{ "first" : "george", "last" : "washington" }

A list of maps with trailing commas:

{ "first" : "joe", "last" : "smith" },

{ "first" : "mary", "last" : "lamb" },

{ "first" : "george", "last" : "washington" }

A list of maps with surrounding brackets on their own lines:

[{ "first" : "joe", "last" : "smith" }]

[{ "first" : "mary", "last" : "lamb" }]

[{ "first" : "george", "last" : "washington" }]

Multi-line documents

-

Each file or field contains multiple JSON documents, and each document spans more than one line.

-

The documents may be separated by commas.

-

The entire input may be surrounded by array brackets

[], in which case the brackets are ignored and the input is treated as a series of documents. -

In order for the JSON Input tool to find breaks between documents, the input must adhere to this rule. The closing

}of each JSON document must be the first non-whitespace character on the line, and must appear at an indentation level less than or equal to the indentation level of the opening{.

For example, this is a valid Multi-line documents input:

{

"first" : "joe", "last" : "smith"

}

{

"first" : "mary", "last" : "lamb"

}

{

"first" : "george", "last" : "washington"

}

This is a valid Multi-line documents input:

[{

"first" : "joe", "last" : "smith"

},

{

"first" : "mary", "last" : "lamb"

},

{

"first" : "george", "last" : "washington"

}]

And this is a valid Multi-line documents input:

[

{

"first" : "joe",

"last" : "smith"

},{

"first" : "mary",

"last" : "lamb"

},{

"first" : "george",

"last" : "washington"

}

]

However, this is not valid Multi-line documents input, because the closing } of the document does not occur at the beginning of a line:

{

"first" : "joe",

"last" : "smith" }

{

"first" : "mary",

"last" : "lamb" }

{

"first" : "george",

"last" : "washington" }

This is also not valid Multi-line documents input, because the closing } of the document is at a higher indentation level than the opening {:

[

{

"first" : "joe",

"last" : "smith"

}

{

"first" : "mary",

"last" : "lamb"

}

{

"first" : "george",

"last" : "washington"

}

]

Map-enclosed array

Each input file or field contains multiple JSON documents nested inside a single element:

{

"value": [

{

JSON document

},

{

JSON document

},

{

JSON document

}

]

}

Multi-document mashup

Like Multi-line document mode, but documents are simply strung together as a stream, with no newlines. This option accommodates undifferentiated JSON "dumps".

Configure the JSON Input tool

The procedure for configuring a JSON Input tool depends on the data source.

|

If the data source is... |

Do this |

|---|---|

|

A file or files |

|

|

A field or datastream |

This tool will not display an output connector until you have configured the input.

...to read files

To configure the JSON Input tool to read files:

-

Select the JSON Input tool.

-

Go to the Configuration tab on the Properties pane.

-

Select Input from and choose File, and then specify the input file or files. You can use wildcards to configure a single JSON Input tool to read a sequence of files with the same layout and format.

-

Optionally, specify Multi-doc mode:

-

Single document: (default) each input file or field contains exactly one document.

-

Document per line: each input file or field contains multiple JSON documents, with one document per line of text.

-

Multi-line documents: each input file or field contains multiple JSON documents, and each document spans more than one line.

-

Map-enclosed array: each input file or field contains multiple JSON documents nested inside a single element.

-

Multi-document mashup: each input file or field contains a stream of JSON documents, and the documents are not delimited by newlines.

-

-

Define the input format.

|

If you have... |

Do this |

|---|---|

|

A JSON schema for this file already defined in the repository. |

Select Use repository schema, and choose the schema from the drop-down list. |

|

No schema defined for this file. |

Select Analyze |

Output connectors are unavailable until input specifications have been defined.

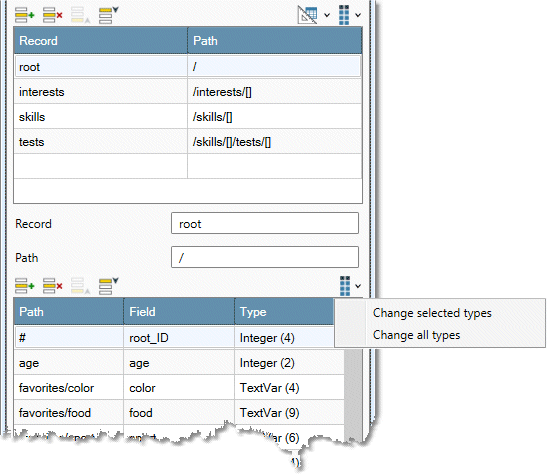

-

Select each cell in the Record column, and examine the fields grid to verify that the schema is correct and the data is accurately described. If a field type is incorrect, select the cell in the Type column, and specify the field's data type and size. You can also use the Change selected types and Change all types commands on the

Linkage fields (ID fields that associate parent and child records) must be of type Integer (4).

-

Optionally, select the Options tab and configure advanced options:

-

To include the name of the input file as a new field, select Produce file name field and specify a Field name and Field size. Select Output full path to include the complete file specification. This can be useful when reading a wildcarded set of files. Select Output URI path to express the complete file specification as a Uniform Resource Identifier.

-

If you don't want to process the entire file, select Limit records and type the desired number of records to process.

-

To produce a single output in which array values are combined into a single field and separated by commas, rather than sent to a separate output as a child relation of the root document, select Flatten value arrays, and then analyze the data.

-

Configure error handling.

-

-

Optionally, go to the Execution tab and Enable trigger input, configure reporting options, or set Web service options.

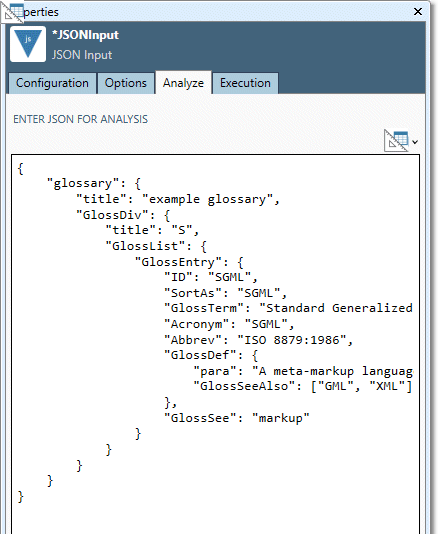

...to read fields

To configure the JSON Input tool to read fields:

-

Select the JSON Input tool.

-

Go to the Configuration tab on the Properties pane.

-

Select Input from and choose Field.

-

Select Commit

-

Specify the Input field from which the data will be read. If you are connecting to an upstream Web Service Input tool, this is

requestBody.

Field data must be binary. If necessary, use an upstream Calculate tool configured with the BinaryRecastFromText function to convert the input data to type binary.

|

If you have... |

Do this |

|---|---|

|

A JSON schema for this file already defined in the repository. |

Select Use repository schema, and select the schema from the drop-down list. |

|

No schema defined for this file. |

Select the Analyze tab and enter a formatted sample of the expected JSON input. Select Analyze

The Analyze tab is only available when Input from is set to Field. |

Output connectors are unavailable until input specifications have been defined.

-

On the Configuration tab, select each cell in the Record column, and examine the Fields grid to verify that the schema is correct and the data is accurately described (see About JSON paths for details). If a field type is incorrect, select the cell in the Type column, and specify the field's data type and size. You can also use the Change selected types and Change all types commands on the

Linkage fields (ID fields that associate parent and child records) must be of type Integer (4).

-

Optionally, specify a Filter for one or more Outputs. This is a Boolean expression to detect variant records within an Output. If specified, the filter must evaluate to

Truefor the record to be output. -

Optionally, select the Options tab and configure advanced options:

-

To include the name of the input file as a new field, select Produce file name field and specify a Field name and Field size. Select Output full path to include the complete file specification. This can be useful when reading a wildcarded set of files. Select Output URI path to express the complete file specification as a Uniform Resource Identifier.

-

If you don't want to process the entire file, select Limit records and type the desired number of records to process.

-

To produce a single output in which array values are combined into a single field and separated by commas, rather than sent to a separate output as a child relation of the root document, select Flatten value arrays, and then analyze the data.

-

Configure error handling.

-

-

Optionally, go to the Execution tab and Enable trigger input, configure reporting options, or set Web service options.

JSON paths

Data Management supports the following JSON Path elements.

|

Element |

Meaning |

|---|---|

|

|

Separator between segment of the path (for example, A/B/C). Each segment represents a level in the JSON object nesting denoted by |

|

|

Indicates the start an array level. |

|

|

A placeholder for a globally-unique generated ID for the output record. |

|

|

A placeholder for the parent record's ID. |

For example, this formatted JSON would be decomposed into the following relational model with a Parent record and a Child record.

{

"firstName": "John",

"lastName": "Smith",

"age": 25,

"address": {

"streetAddress": "21 2nd Street",

"city": "New York",

"state": "NY",

"postalCode": "10021"

},

"phoneNumber": [

{

"type": "home",

"number": "212 555-1234"

},

{

"type": "fax",

"number": "646 555-4567"

}

]

}

Parent record

Path: /

Values found at various field paths

|

Path |

Contents |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Child record

Path: /phoneNumber

Values found at various field paths

First child record

|

Path |

Contents |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

Second child record

|

Path |

Contents |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|